This post is part of Lifehacker’s “Exposing AI” series. We’re exploring six different types of AI-generated media, and highlighting the common quirks, byproducts, and hallmarks that help you tell the difference between artificial and human-created content.

AI art isn’t just a concept anymore: It’s everywhere, and you’ve likely encountered it in the wild, whether you knew it or not. AI art has won awards (albeit amid controversy), been used in Netflix films (again, facing criticism), and tricked untold numbers of Facebook users with false images of babies, Jesus, and vegetables. It’s not going anywhere anytime soon.

As the technology for generating images continues to improve, it’s more important than ever to learn how to spot AI-generated images, so you aren’t fooled by someone else’s fakes. Shrugging off an AI-generated image of some ducks may seem harmless, but when bad actors use AI images to spread disinformation, the implications are dire. Companies that make AI image generators are working on ways to “watermark” AI-produced images, but we’re just not there yet: Most AI art online circulates without a clear label warning users that the images aren’t real. Until that changes, these tips can help you spot fakes when they pop up in your feed.

How AI art generators work

It’s easy to take a look at a piece of AI art and assume the generator that produced it simply stitched the piece together from a bunch of images in its database. That’s not really what’s happening here, though.

AI art generators are indeed trained on huge datasets of images, from art to photographs. However, these tools cannot “see” those images the way humans do. Instead, they break each image down into pixels: Rather than an apple, the model sees a group of pixels and their values. Over time, it learns that this particular grouping of pixel values tends to be an apple. Train the AI on enough images with enough context, and it will start to draw connections among all kinds of objects and styles. It’ll learn how to generally render fruit, animals, and people, as well as art styles, colors, and moods.

Modern AI image generators, like DALL-E, use what’s known as diffusion to learn and generate images. Essentially, they take a training image and add visual noise (think static) to it, step by step, until the entire picture is meaningless. The idea is to learn how the image is affected by the noise added at each step. From there, the model learns to do the opposite: Starting from nothing but noise, it removes that noise step by step to build its own version of an image, which is what lets the AI create images from scratch.
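To make the “add static” half of that process a little more concrete, here’s a minimal Python sketch of the forward noising step used in DDPM-style diffusion models. It’s an illustration under stated assumptions, not how DALL-E is actually built: The “image” is a toy list of eight grayscale pixel values scaled to [-1, 1], the noise schedule (1,000 steps, growing linearly from 0.0001 to 0.02) is a common choice in published diffusion research rather than anything OpenAI has disclosed, and the function names are hypothetical. The learned reverse step, a neural network trained to predict and remove the noise, is left out entirely.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Hypothetical helper: how much noise each step adds, growing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def signal_fraction(betas):
    """Cumulative product of (1 - beta): how much of the original image
    survives after each step (often written alpha-bar)."""
    fractions = []
    keep = 1.0
    for beta in betas:
        keep *= 1.0 - beta
        fractions.append(keep)
    return fractions


def add_noise(pixels, alpha_bar, rng):
    """Jump straight to a given noise level:
    sqrt(alpha_bar) * image + sqrt(1 - alpha_bar) * random static."""
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [signal * p + noise * rng.gauss(0.0, 1.0) for p in pixels]


if __name__ == "__main__":
    rng = random.Random(0)
    # A toy "image": eight grayscale pixels scaled to [-1, 1].
    image = [-1.0, -0.5, 0.0, 0.5, 1.0, 0.5, 0.0, -0.5]
    betas = linear_beta_schedule(1000)
    alpha_bars = signal_fraction(betas)
    for t in (0, 100, 500, 999):
        noisy = add_noise(image, alpha_bars[t], rng)
        print(t, round(alpha_bars[t], 4), [round(p, 2) for p in noisy])
```

Running it prints how much of the original signal survives at a few points along the way. By the last step, almost nothing of the original pixels is left, which is the “meaningless” static a diffusion model is trained to work backward from.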

This is an extremely simplified explanation, but the fundamental idea to remember is that these generators draw on a huge web of learned relationships, not a database of stored pictures. That’s what makes it possible to render complex scenes in seconds, but it’s also what produces the weird quirks that can help us tell an AI image from a real one.

Count the fingers

AI art is getting better, but it still isn’t perfect. While these tools are capable of generating high-quality images with realistic lighting, they still struggle with smaller details.

Perhaps most famously, “human” subjects in AI art tend to have the wrong number of fingers. The rest of the image may seem convincing at first glance, but look at the hands, and you might notice six, seven, or eight fingers on each. Or maybe it’s the opposite: The hand has three fingers, two of which are morphing into one. Either way, fingers and the hands they’re attached to are often messed up.

But while fingers are what AI is most famous for getting wrong, its issues don’t stop at the hands. AI art can render any repeating pattern incorrectly, especially when it comes to human details. Take a look at a subject’s teeth: Sometimes there are far too many, or they’re distorted in a way real teeth never would be. Most of us don’t have perfect smiles, but AI teeth are on another level.

You may even see a subject with an extra limb: You’ll be looking at an image, wondering what seems off about it, when all of a sudden you notice the subject’s third arm coming out of their sweatshirt.

Vox has a great video exploring why AI struggles with these repeating elements, but essentially, it comes down to a lack of experience on the AI’s part. These tools are trained on a huge amount of data, but for something complicated like hands, that data doesn’t provide enough context for the model to know how to render the element properly and realistically. It doesn’t know how hands actually work; it can only pull from the hands it has seen. We’re experiencing the limits of that knowledge here.

Watch for blending elements

Look at enough AI art, and you’ll notice something weird: Things blend and morph into each other all over the place. I already mentioned this happens with fingers, but it can happen with many other elements of the subject, including teeth morphing into other teeth, clothing blending into itself, and eyes that seem to bleed into the other parts of the subject’s head.

But it’s not just the subjects: Everything in the image is fair game for this blending. Look at the image I generated with DALL-E below. The board game is wavy, with parts of the board morphing into other parts and pieces blending into the tiles. The teeth of the woman on the right are all squished together, while the other woman’s sweater cuffs fold into themselves. (Not to mention, her fingers are blending into one.)

Prompt: “Generate an image of a group of friends smiling at each other while playing a board game.”

Credit: Jake Peterson/OpenAI

Critique the writing

AI might be able to generate text, but it often can’t write well in an image. In many cases, AI art that incorporates writing will look off. Sometimes, it’s a logo that resembles its real-world counterpart, but doesn’t get all the way there. (You can tell it’s trying to say Coca-Cola, but all the letters are getting jumbled together.) Other times, it looks like someone trying to make up a language, or how it feels to try to read something in a dream. (In fact, a lot of these AI images give off a dream-like quality, at least to me.)

Now, this side of AI art is rapidly improving. Both DALL-E and Meta AI were able to generate an image of a cake that reads “HAPPY BIRTHDAY KAREN” without any horrendous issues. That said, Meta’s wasn’t perfect: The second “P” in “HAPPY” looked more like a “Y,” while the “A” in “KAREN” had two lines through it rather than one. But it’s important to note that text comes out better when you specifically prompt the AI to write it out. Left to its own devices, the AI often produces writing that looks odd, so if whoever created the image didn’t think to fix it, the text can be an obvious tell.

Ask DALL-E to generate a map, and it will, with some degree of accuracy, albeit with “AI writing.”

Credit: Jake Peterson/OpenAI

Look for things that just don’t make sense

At the end of the day, AI art generators don’t really know anything. They produce art based on the relationships they’ve forged from all their training data. They don’t know how a building is really supposed to be constructed, how tennis is played, or how a human hand moves; they pull from their training to fulfill these requests to the best of their abilities. If you look closely enough, you can see these gaps in knowledge throughout AI artwork, especially in pictures with a lot going on.

Take this image, for example: I asked DALL-E to generate an image of a basement party, with people playing beer pong, drinking out of red Solo cups, and chatting. Right off the bat, there are some major issues: The eyes of the people in frame are mostly off; the hand on the man playing beer pong is messed up; and why is he throwing ping pong balls from the side of the table? Speaking of the table, it’s wavy and warping in a way a real table wouldn’t, and there are two sets of cups on one side of the table for some reason.

Look toward the background, and things get even weirder. A man appears to be kneeling and drinking from his red cup as if it were a bottle. The man behind him appears to have a blue can inside his red cup, as if the cup were a koozie. The person farther back looks as if their face was Photoshopped on, and they’re blurry to boot.

Prompt: “Generate an image of a basement party: People play beer bong, drink from red solo cups, and chat.”

Credit: Jake Peterson/OpenAI

Even official examples from the companies that make these tools have these logical inconsistencies. To show off DALL-E 3, OpenAI shared a funny image of an avocado in therapy.

Credit: OpenAI

The avocado has a scooped-out pit and is complaining to the therapist, a spoon, about feeling empty inside. It’s a decent bit, but look at the therapist’s notepad: The spoon is writing on it backwards, with the pages facing out. DALL-E has seen enough therapist images to know what therapists usually write notes on, but doesn’t understand that we humans write on the paper, not the back of the clipboard.

Of course, the AI posts that go viral on social media sites like Facebook often don’t make sense at all. Who is building cat sculptures out of Oreos? Why are there so many sad artists making sandcastles of Jesus? Art can be imaginative, but AI art is particularly odd, in both the smaller details and the larger themes.

AI sheen

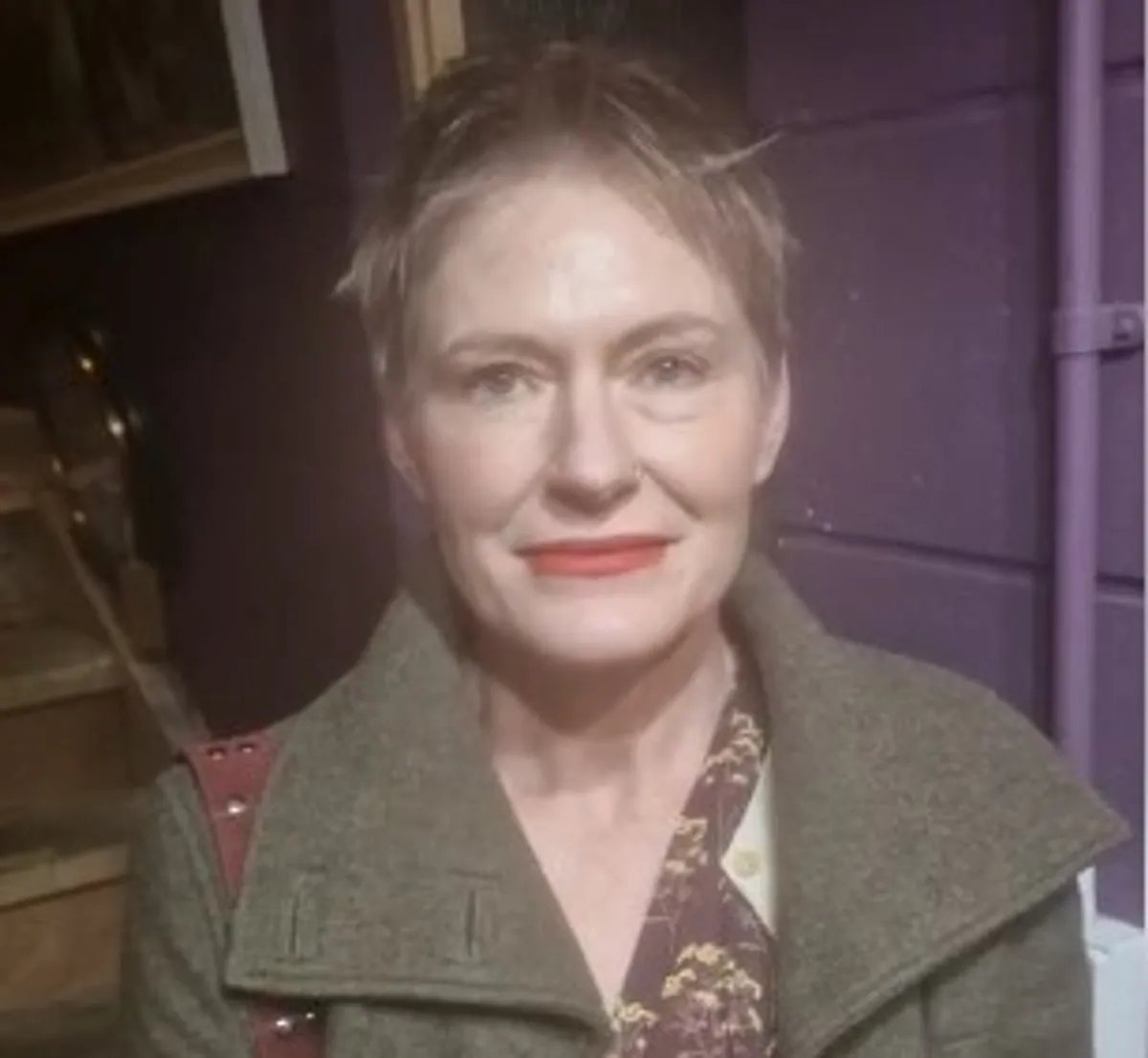

After looking at AI images for a while, you start to notice something peculiar, especially among the photorealistic pictures: Everything is shiny. AI images often have what some call “AI sheen,” a shininess that can give away the origins of the picture if you know what you’re looking for. Images are often overexposed, or feature dramatic lighting, which makes subjects particularly bright.

Eventually, you can look at a photo like the one below and instantly know it was made with AI just from the way it looks. (Even if the subject’s hands weren’t also off.)

Prompt: “Generate a photorealistic image of a woman holding a baseball with two hands.”

Credit: Jake Peterson/OpenAI

Employ a healthy level of skepticism

These tips are relevant today, but AI tech continues to advance and may render them useless in the near future. AI is already getting better at writing, so who’s to say it won’t figure out how to generate realistic hands every time? Or stop elements in the photo from blending together? Or stop adding weird shit to the backgrounds of images? And while the tips above are currently useful for photorealistic images, AI-generated artwork can be harder to identify: These pieces can have the same flaws noted above, but it’s easier to mask those inconsistencies with “paint,” which is often blended, less realistic, and more open to interpretation.

As we head into a particularly turbulent election year, it’s going to be more important than ever to turn up your BS radar as you browse the internet. Before you’re impressed by someone’s intricate artwork, or enraged by an offensive image, think twice: Is this image even real?