OPINION: Adobe recently unveiled the newest and most advanced version of its Firefly generative AI model, Firefly Image 3, but could this update trigger an increase in art theft and imitation?

Currently in its beta phase, the Firefly Image 3 model promises some significant upgrades for both Firefly and Photoshop.

What’s new?

Firefly is now better at understanding prompts and produces images with stronger structure and finer detail. The Image 3 model can also render a more varied range of human emotions and draws inspiration from a broader range of art styles.

Photoshop’s Generative Fill gen-AI tool has also received a substantial Firefly-powered update, with new features including Reference Image, Enhance Detail, Generate Background, and Generate Similar.

Reference Image is the tool that immediately caught my attention, allowing users to upload existing images as prompts rather than relying on a short textual description. During a demo with Adobe, I watched as a picture of a guitar was uploaded as a Reference Image prompt. Firefly then took heavy inspiration from that picture, placing a near-identical guitar in the arms of a bear in the image already open in Photoshop.

Used for evil

This technology is very impressive, making it possible to convey an item’s specific shape, style, and colour to Generative Fill without typing a word. However (and perhaps this says something about me), I was immediately struck by how this feature could be used for evil.

Art theft is already a pervasive problem online, with people reuploading other artists’ work and claiming it as their own, whether for money or likes. Couldn’t this feature be used to generate art similar to a piece that’s already out there?

I took these concerns to Ely Greenfield, CTO of Digital Media Business at Adobe, and this is what he told me.

“The concept of whether an artist has a right to their style is a complicated one”

“Our perspective at Adobe is that an artist should have a right to their style and we work with regulators, we’re promoting various legislation in the US right now, I think, to try and actually get laws passed to support that. What I’ll say is yes, you could upload an image as a style match, as a reference match, and the whole point is to be able to use that as a source, as a seed, to be able to generate other things like that”, explained Greenfield.

“There’s a certain degree of responsibility that we put on the user. I mean this is like anytime you’re using any editing tool, even a pencil, you have the ability to copy somebody else’s work and so we can’t stop people from doing that. We do everything we can in the tool to try and help encourage people to act with integrity. We remind people to make sure they have the rights. We check for content credentials to see if there’s a flag on it that says please do not use this with AI, but at the end of the day, yes, you can upload somebody else’s content and use it even when you don’t necessarily have the rights and permission to do it”.

Could someone make money from your art style?

I asked Greenfield if there are any systems in place to flag images that have been generated from another piece of art, or to prevent them from being uploaded to print-on-demand websites such as Redbubble or Amazon.

“There’s no way for us to validate whether you have a right to an image, and there’s no way for us to track what you do with that image after the fact. So unfortunately, no, there’s nothing we can do about that”, he said.

“The best we can do, and a lot of this is our half of our investment with the Content Authenticity Initiative, half of it is technical. How do we create the systems that allow us to track this information? And half of it is societal, social, political. We work with regulatory agencies, we work with other media and technology companies, hardware companies, software companies, to try and get the technology out there, so that we can, over time, change the expectation of the public”.

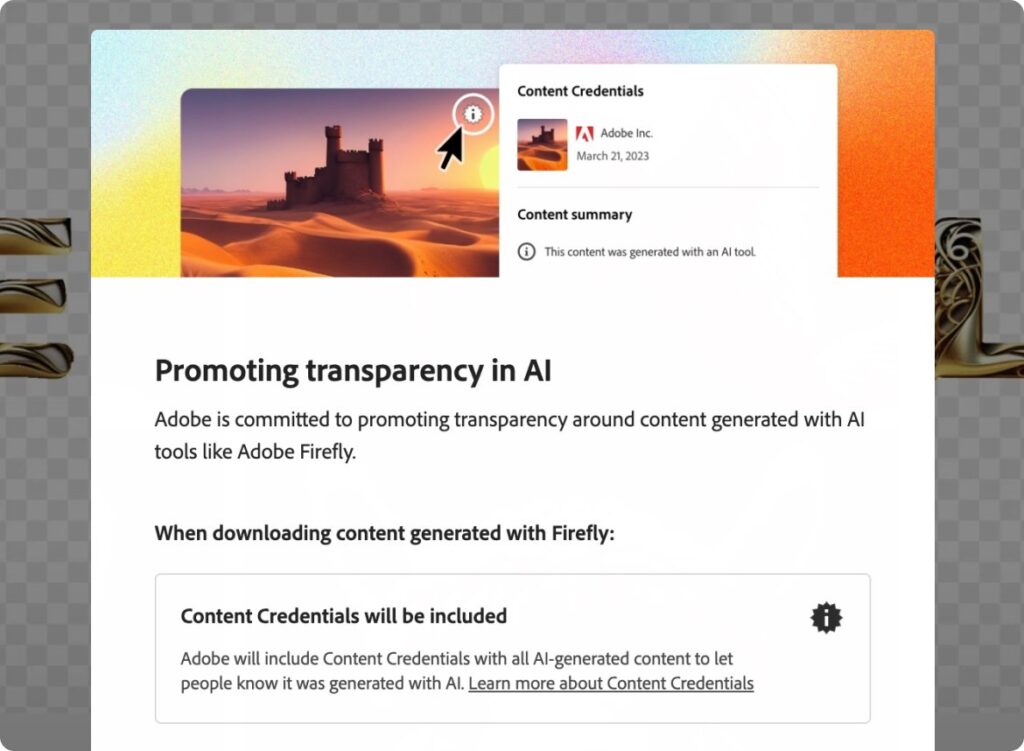

The Content Authenticity Initiative

The Content Authenticity Initiative is a collective of media and tech companies that are working together to promote the adoption of an open industry standard for content authenticity. Adobe has been doing its part by marking images created or edited using AI with its Content Credentials metadata.

“[One] analogy I like to use is the lock icon on the browser where we got to the point where eventually people started to expect that lock icon and, if it wasn’t there, they were suspect of the website, wouldn’t put their credit card in. Now, we’re getting close to the point where now the browser is starting to say, if it’s not there we’re just going to not let you see the website. You can work around it, you can say I want to take the risk, but more and more the browser is saying [no]”, explained Greenfield.

“I’d love to see us get to that point with Content Credentials, but it’s definitely a journey to get from here”.

Is generative AI bad for artists?

This doesn’t mean Adobe is against generative AI. You only need to spend five minutes in Adobe Firefly to realise that Adobe is one of the frontrunners in this race.

“I actually don’t think manipulation is inherently a bad thing, and AI isn’t inherently a bad thing”, said Greenfield when asked if Adobe’s vision for the future was an internet in which every image is flagged if it has been manipulated using AI.

“If we wanted to flag any image where AI was used in the making of the image and put a big warning label on it, every photo that came out of an iPhone would be flagged because AI is used in the rendering, in the capturing of the image. It is not actually the raw luminosity values that the sensor is seeing. Apple actually has AI, wonderfully. It does things like detect faces and brighten them up because that’s what people want to see in their photos. 99.999% of the use cases of people using generative AI in Photoshop are perfectly legitimate reasons… they’re either making changes that don’t distort truth, or they’re not trying to represent truth”.

“I don’t think we end up in a world where we’re getting warning signs left and right, like AI was used on this. I think we end up in a world where when a consumer says, oh this person is representing truth, or we could have situations where the AI can help detect is this an editorial story, is this meant to be representing truth, and that information is surfaced in those situations so the consumer can choose to go and make a choice”.

What is Adobe’s end goal with Content Credentials?

“Our job is to, number one, educate the public that we all should be paying attention to this when it matters, and number two, make it drop dead easy, stupid easy, for people to then go and actually get the information they need and understand how to put that information to work when they decide it’s important to them. That’s the vision of the future”.